Agentic Orchestration on Kubernetes: The Lumiflow Approach

Why Kubernetes Matters for AI

Kubernetes (K8s) isn’t just infrastructure glue—it’s the operating system for modern, scalable software. Originally built to run stateless microservices in resilient clusters, K8s gives you powerful primitives: scheduling, autoscaling, health checks, and rollout control. It’s how enterprises deliver global-scale apps with confidence. But here’s the catch: AI agents aren’t microservices. They’re stateful, adaptive, and unpredictable. They reason in loops, consume dynamic context, call APIs mid-run, and burn compute if left unchecked. Kubernetes gives us the raw muscle to run them. But it doesn’t know how to understand, govern, or contain them.

That’s where Agentic Orchestration comes in—bringing the safety, visibility, and runtime control AI agents need to thrive in production. Lumiflow turns Kubernetes into an agentic platform.

Lumiflow adds a missing layer of intelligence and governance on top of the container substrate—so AI agents can be deployed safely, monitored transparently, and controlled precisely. Traditional orchestration tools assume jobs are stateless and deterministic. Agentic Orchestration flips the script—dealing with autonomy, intent, and reasoning under guardrails.

With Lumiflow, you get a system that lets you:

- Define once, execute anywhere—cloud, on-prem, or edge.

- Control cost, behavior, and access at runtime—not just at deploy-time.

- Observe reasoning paths, not just logs.

- Empower every team—from developers to finance—to ship AI safely.

How Lumiflow Turns Kubernetes into an Enterprise Agentic Platform

Lumiflow isn’t just compatible with Kubernetes—it transforms it into a purpose-built engine for safe, scalable AI execution. Here’s how:

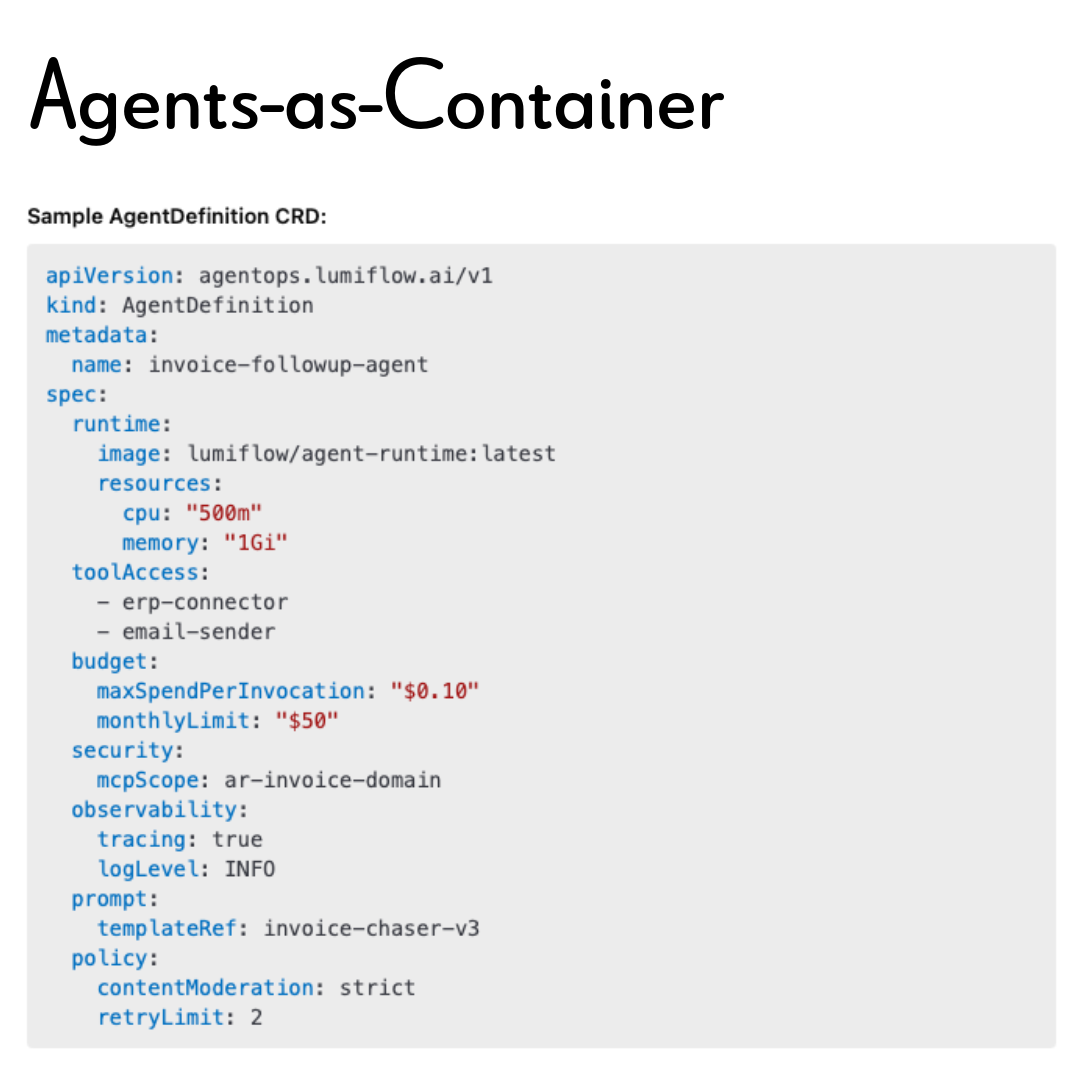

- Declarative Runtime Infrastructure: Every agent, tool, and workflow is defined as a Kubernetes custom resource whose schema comes from a Custom Resource Definition (CRD), so it behaves like a native Kubernetes object. These resources are portable, versioned, and Git-native, making agents deployable by both YAML-savvy developers and low-code builders via our visual canvas. One spec, everywhere. No drift. No fragile scripts.

- Sidecar-Enforced Guardrails: Each agent is automatically wrapped with sidecars that enforce real-time policy: budget ceilings, tool whitelists, moderation filters, and telemetry hooks. These guardrails run alongside the agent—not inside it—ensuring compliance and observability even if the agent is dynamically reasoning or being steered by a large language model.

- Model Context Protocol (MCP): Instead of exposing tools and data through ad hoc APIs, Lumiflow uses MCP to dynamically scope access at runtime. Agents only see what they’re authorized to use—no more accidental overexposure or brittle hand-rolled integrations. Policies are declarative, inspectable, and enforceable.

- GitOps-Native Deployment: Prompts, policies, and workflows are treated like code. Developers push to Git, where audit trails, approval workflows, and environment-specific policies kick in automatically. Argo CD handles the rollout, canarying, and drift detection, so your AI moves at the speed of DevOps, not shadow IT.

- Full Observability for Reasoning Chains: Thanks to built-in OpenTelemetry (OTEL) support, every decision your agents make is logged, traced, and attributed—across tools, costs, retries, and latency. This isn’t black-box magic; it’s fully inspectable AI behavior, with dashboards tuned for developers, security teams, and business owners alike.

- Role-Based Agent Marketplace: The Lumiflow Agent Marketplace provides a shared source of truth for every team. Engineers see tools and CRDs. Security sees scopes and violations. Finance sees cost ceilings and usage breakdowns. Product sees capabilities they can plug into new flows. One platform. Tailored views. No silos.

This Is Agentic Orchestration in Action:

- Spin up a multi-tool reasoning agent from a single spec

- Attach a $0.10/task budget and restrict tool calls by scope

- Monitor it live—see its reasoning chain, decisions, and costs

- Patch the prompt, roll out a new variant via Git, or kill misbehaving pods instantly
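
The budget and scope steps above can be sketched in a few lines of Python. This is a minimal illustration of the kind of check a guardrail sidecar might perform, not Lumiflow’s actual implementation: the `TaskGuardrail` class, its field names, and the tool names are assumptions made for the example.

```python
from dataclasses import dataclass
from decimal import Decimal


class GuardrailViolation(Exception):
    """Raised when a tool call would break the agent's declared limits."""


@dataclass
class TaskGuardrail:
    # Hypothetical per-task limits; names are illustrative only.
    budget_usd: Decimal
    allowed_tools: frozenset
    spent_usd: Decimal = Decimal("0")

    def authorize(self, tool: str, estimated_cost_usd: Decimal) -> None:
        """Check a proposed tool call before it runs, then record its cost."""
        if tool not in self.allowed_tools:
            raise GuardrailViolation(f"tool {tool!r} is outside the allowed scope")
        if self.spent_usd + estimated_cost_usd > self.budget_usd:
            raise GuardrailViolation(f"call to {tool!r} would exceed the task budget")
        self.spent_usd += estimated_cost_usd


# A $0.10 task budget with two permitted tools (assumed names).
guardrail = TaskGuardrail(
    budget_usd=Decimal("0.10"),
    allowed_tools=frozenset({"erp-connector", "email-sender"}),
)
guardrail.authorize("erp-connector", Decimal("0.04"))
guardrail.authorize("email-sender", Decimal("0.05"))
```

Using `Decimal` rather than floats keeps small per-call costs from accumulating rounding error. Running the check outside the agent process mirrors the idea that guardrails sit alongside the agent, not inside it.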

We shift the design paradigm:

- From availability → to intent and autonomy

- From stateless jobs → to goal-driven loops

- From scripts → to declarative, observable specs

Lumiflow doesn’t just make AI work in Kubernetes—it makes it safe, governable, and ready for production at enterprise scale. That’s the difference between an AI demo and an AI platform.

The Lumiflow Capability Continuum

AI maturity isn’t a binary state—it’s a layered evolution. Most enterprises won’t leap from simple API calls to autonomous decision-makers overnight. Instead, they move along a continuum of increasing agentic complexity, where each stage demands different infrastructure, governance, and runtime strategy. Lumiflow is built to support this progression—from basic utility to full autonomy—without rewriting your stack at every step.

1. Tool Fabric: The Raw Materials of Agentic Work

Every intelligent system starts with simple actions. In Lumiflow, these are packaged as the Tool Fabric—stateless, policy-governed services that handle atomic tasks like extracting totals from invoices, translating language, scraping web data, or querying enterprise systems. On their own, these tools aren’t “intelligent.” They don’t reason, adapt, or learn. But they are essential—they’re the safe, reliable utilities that agents call upon to do their work. And in complex systems, knowing what an agent can use matters just as much as what it knows.

What makes these tools different in Lumiflow is how they’re governed. Each tool is wrapped in a container of enforcement: observability via OpenTelemetry, runtime scoping through the Model Context Protocol (MCP), and deployment as a CRD—complete with usage budgets, access policies, and SLA expectations. This ensures that agents can only access tools they’re explicitly authorized to use, with guardrails baked in at the infrastructure level. Think of Tool Fabric as an enterprise-grade command palette—a curated set of capabilities your agents can draw from, fully pre-approved, rate-limited, and observable. It’s the first layer where autonomy meets accountability.

2. Digital Workflows: Structured Automation with Logic, Not Learning

With a library of tools in place, the next evolution is Digital Workflows—structured sequences that string those tools together into repeatable automation pipelines. These are built as deterministic DAGs (Directed Acyclic Graphs) using orchestration frameworks like Dagster or Prefect. They follow strict logic: if this happens, do that. Perfect for handling predictable, low-latency tasks like processing a loan application, sending a follow-up email, or triggering a fraud score calculation. They’re highly efficient, easily reusable, and auditable by design—ideal for use cases where clarity and compliance matter more than creativity.

But while these workflows are structured, they aren’t adaptive. They don’t reason, reflect, or revise; they simply follow instructions, step by step. They can automate a lot—but only what you explicitly tell them. That makes them incredibly powerful for known processes, yet brittle in the face of ambiguity or novelty. Think of Digital Workflows as your organization’s macros—automated routines that handle the mundane at scale, but stop short of making real-time decisions. They’re the bridge between tools and true autonomy—reliable, rule-based, and ready to scale.
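
To make the deterministic DAG idea concrete, here is a small sketch that uses only Python’s standard library, not Dagster or Prefect. The step names and the loan figures are invented for illustration; the point is that the graph fixes the execution order and no step reasons about what to do next.

```python
from graphlib import TopologicalSorter

# Hypothetical loan-processing steps; each maps to the steps it depends on.
dependencies = {
    "extract_fields": set(),
    "fraud_score": {"extract_fields"},
    "credit_check": {"extract_fields"},
    "decision": {"fraud_score", "credit_check"},
    "send_email": {"decision"},
}

# Each handler is a fixed rule that reads and writes a shared context.
handlers = {
    "extract_fields": lambda ctx: ctx.update(amount=12_000, income=60_000),
    "fraud_score": lambda ctx: ctx.update(fraud=0.02),
    "credit_check": lambda ctx: ctx.update(ratio=ctx["amount"] / ctx["income"]),
    "decision": lambda ctx: ctx.update(
        approved=ctx["fraud"] < 0.5 and ctx["ratio"] < 0.4
    ),
    "send_email": lambda ctx: ctx.update(email_sent=True),
}


def run_workflow(dependencies, handlers, context):
    """Run every step once, in an order that respects the dependencies."""
    executed = []
    for step in TopologicalSorter(dependencies).static_order():
        handlers[step](context)
        executed.append(step)
    return executed


context = {}
executed = run_workflow(dependencies, handlers, context)
```

Because the graph is acyclic and the handlers are plain rules, the same input always yields the same path, which is what makes this style easy to audit and brittle when the input is something nobody planned for.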

3. Agentic Workflows: Where Automation Starts to Think

Agentic Workflows mark the turning point from structured automation to adaptive intelligence. Unlike digital workflows that follow rigid paths, these workflows are powered by agents that can reason, reflect, and revise. They’re built using emerging frameworks like LangGraph, CrewAI, and ReAct, which enable agents to operate with memory, branching logic, and contextual feedback loops. Rather than blindly following a script, these agents consider what just happened, evaluate if it’s working, and decide what to do next—often changing course in real time.

This adaptive behavior introduces a new kind of autonomy. Agents in this layer can track memory across multiple steps, enabling more nuanced decisions. They can detect when a step didn’t produce a usable result and retry or reframe the task. And importantly, they can dynamically select which tool to invoke or which question to ask next, based on the evolving context of the task. This moves automation from deterministic execution into a realm where agents can act more like teammates—handling ambiguity, exception cases, and nonlinear problem solving.

Because these agents make decisions at runtime, they also require stronger governance and deeper observability. Their behavior isn’t always predictable—so understanding what happened, why, and at what cost becomes essential. This is where Lumiflow’s CRD-based model, sidecar policies, and OpenTelemetry traces shine. You can track every step in the reasoning chain, correlate it with tool usage and token spend, and apply cost ceilings and retry limits declaratively. You don’t just deploy a thinking agent—you deploy one you can trust.
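
The loop described above (pick a tool, check the result, retry or reframe, all under a cost ceiling and a retry limit) can be sketched as follows. This is an assumption-laden toy, not LangGraph, CrewAI, or Lumiflow code: `run_agent`, the tool names, and the costs in cents are hypothetical, and a real agent would use a model, not a hand-written rule, to choose the next tool.

```python
def run_agent(goal, choose_tool, tools, is_usable, max_attempts=3, ceiling_cents=10):
    """Try tools until one yields a usable result or a limit is reached."""
    history = []  # working memory: what was tried and how it went
    spent = 0
    for attempt in range(1, max_attempts + 1):
        name = choose_tool(goal, history)
        call, cost = tools[name]
        if spent + cost > ceiling_cents:
            # Refuse the call instead of overspending.
            history.append({"attempt": attempt, "tool": name, "status": "over_budget"})
            break
        spent += cost
        output = call(goal)
        status = "ok" if is_usable(output) else "unusable"
        history.append(
            {"attempt": attempt, "tool": name, "status": status, "spent_cents": spent}
        )
        if status == "ok":
            return output, history
    return None, history


# Hypothetical tools: (callable, declared cost in cents).
tools = {
    "kb-search": (lambda goal: "", 2),
    "crm-lookup": (lambda goal: "Customer is on the premium plan", 3),
}


def choose_tool(goal, history):
    """Stand-in for model-driven selection: switch tools after a failed search."""
    tried = {step["tool"] for step in history}
    return "crm-lookup" if "kb-search" in tried else "kb-search"


answer, history = run_agent(
    "triage this customer escalation", choose_tool, tools, is_usable=bool
)
```

The `history` list doubles as a trace of the reasoning chain: each entry records the tool chosen, whether its output was usable, and the running spend.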

Think of Agentic Workflows like skilled analysts. Give them a goal—like “triage this customer escalation”—and they’ll chart the path forward based on live inputs, organizational constraints, and accumulated knowledge. They may escalate, resolve, or delegate—but they’ll adapt in-flight. This is where intelligent automation truly begins—less like scripting, more like partnering with digital talent.

4. Super-Agents: Strategic Orchestrators with Autonomy at Scale

At the top of the Lumiflow continuum are Super-Agents: AI systems that don’t just complete tasks, they orchestrate them. These are not single-instance executors; they’re persistent, goal-driven AI managers that monitor queues, make prioritization decisions, and direct work across agents, workflows, and tools. Unlike traditional bots that react to triggers, Super-Agents operate continuously, adapting their strategy as context evolves. They don’t just work inside a process; they run the process.

A Super-Agent may oversee an entire business function. It could triage and route incoming support tickets, monitor SLAs, and escalate edge cases to specialized agents. It might analyze sales activity across regions, identify pipeline gaps, and coordinate outreach via marketing automation workflows. In regulated environments, it could enforce policy compliance by dynamically reviewing process changes and dispatching audit agents. In each of these scenarios, the Super-Agent becomes a digital conductor—orchestrating the right agents and workflows at the right time, with the right constraints.
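
As a rough sketch of the support-ticket scenario, the triage step can be modeled as a priority queue plus a routing table. Everything named here (the ticket fields, the agent names, the `human-review` fallback) is a hypothetical stand-in; a real Super-Agent would make these decisions with far more context.

```python
import heapq
from itertools import count

# Hypothetical routing table: ticket category -> specialized agent.
ROUTES = {"billing": "billing-agent", "outage": "incident-agent"}


def triage(tickets, routes, fallback="human-review"):
    """Assign tickets in priority order (1 = most urgent)."""
    tie_breaker = count()  # keeps equal priorities in arrival order
    heap = [(t["priority"], next(tie_breaker), t) for t in tickets]
    heapq.heapify(heap)
    assignments = []
    while heap:
        _, _, ticket = heapq.heappop(heap)
        # Categories without a route are escalated, not guessed at.
        target = routes.get(ticket["category"], fallback)
        assignments.append((ticket["id"], target))
    return assignments


tickets = [
    {"id": "T1", "priority": 3, "category": "billing"},
    {"id": "T2", "priority": 1, "category": "outage"},
    {"id": "T3", "priority": 2, "category": "legal"},
]
assignments = triage(tickets, ROUTES)
```

The explicit fallback is the important design choice: edge cases the routing table does not cover go to review instead of being forced onto the nearest agent.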

This fundamentally transforms how organizations operate. Where human managers once had to parse dashboards, assign work, and follow up manually, Super-Agents can manage these flows continuously and at scale. One Super-Agent can handle the workload of dozens—sometimes hundreds—of staff members, not by replacing expertise, but by automating coordination, context-switching, and execution. And because they operate on governed infrastructure, with full observability and policy enforcement, they can do so with accountability and traceability built in.

Super-Agents are also domain-aware. They’re provisioned with scoped access to relevant tools, context, and history, enabling them to act with continuity and intent. They don’t need to be reprogrammed to handle variation—they evolve their execution strategy dynamically. As business conditions shift, a Super-Agent can pause one initiative, escalate another, or reroute attention to emerging priorities. This is where AI stops being a backend enhancement and starts acting like a layer of operational leadership—always on, always optimizing.

From an organizational design perspective, the rise of Super-Agents reshapes the human-AI partnership. Instead of managing low-level automation, staff begin managing intent, outcomes, and exceptions. Leaders no longer need to dig into every process—they interact with Super-Agents, set high-level goals, and receive strategic summaries, risk flags, and next-best action recommendations. This lifts cognitive load, streamlines workflows, and accelerates execution cycles dramatically.

Metaphorically, Super-Agents are your AI Chief of Staff. They don’t just execute; they delegate, prioritize, and keep the business humming. They abstract away complexity so humans can focus on judgment, creativity, and relationships. And as more capabilities come online, from internal tools to third-party APIs, Super-Agents become the bridge that turns fragmented systems into coordinated, adaptive ecosystems. This is the future of enterprise orchestration, and it’s already here.

The journey to integrating AI into enterprise systems is fraught with challenges, but Lumiflow offers a comprehensive solution. By transforming Kubernetes into an agentic platform, Lumiflow ensures that AI agents are not only operational but also safe and governable. This approach allows enterprises to harness the full potential of AI, moving beyond simple automation to intelligent orchestration. With Lumiflow, organizations can confidently scale their AI initiatives, knowing that each agent operates within a framework of accountability and control.

The Lumiflow Architecture

Each step along the Lumiflow Capability Continuum introduces new demands—greater autonomy, wider access scopes, stricter budgets, and more nuanced lifecycle management. The difference between a stateless tool and a reasoning Super-Agent isn’t just complexity—it’s operational gravity. As you climb the continuum, the infrastructure required to manage, govern, and trust these systems becomes exponentially more sophisticated.

That’s why Lumiflow doesn’t treat agents as side projects or bolt-ons. It’s designed from the ground up to span the entire spectrum—from atomic tools to orchestrated agent ecosystems—without compromising governance or velocity. You don’t have to replatform to grow. You don’t have to sacrifice safety to scale.

To support this progression, Lumiflow is architected around three intersecting planes of capability:

- A Control Plane, for enforcing policy, setting guardrails, and maintaining organizational visibility.

- A Runtime Plane, to execute agents reliably across cloud, on-prem, and edge environments—with full portability and enforcement.

- And an Experience Plane, built to empower developers, data scientists, low-code teams, and SREs with a unified, intuitive workflow.

These aren’t abstract layers—they’re the operational spine of AgentOps. Together, they unlock a powerful new paradigm where AI moves beyond prototypes and pilots, and into trusted, production-grade autonomy at enterprise scale. Let’s explore each layer in detail.

The Control Plane

Govern, Observe, and Navigate the Agentic Landscape

The Control Plane is where Lumi transforms AI from isolated scripts into safe, observable, and enterprise-grade infrastructure. At the center is the Agent Marketplace, a live, organization-wide catalog of AI capabilities. Every tool, workflow, agent, and orchestrator is declared as a Kubernetes custom resource (an instance of a CRD), making it instantly discoverable, composable, and governed. Whether authored in YAML, TypeScript, or Python, or visually built in a drag-and-drop interface, each Marketplace entry is a versioned, executable object, ready for real-time deployment.

This unified system gives each team what they need. Developers export and promote CRDs via the aops CLI or the low-code builder. Non-technical users can create agentic workflows by snapping together logic from reusable nodes. Security and Finance teams get structured dashboards showing policy scopes, usage trends, and violations—no YAML spelunking required. Everything in the Agent Marketplace is live, auditable, and linked to runtime enforcement.

That enforcement is powered by Lumi’s Policy Engine, combining Open Policy Agent (OPA) with domain-specific guardrails to enforce budget limits, access scopes, and output moderation. Meanwhile, the Observability Bus—built on OpenTelemetry—captures rich traces of every agent decision, cost, and outcome, making black-box reasoning fully transparent. Together, the Agent Marketplace and its supporting control infrastructure make the Control Plane the governance backbone of AgentOps—turning enterprise AI into something you can trust, track, and scale.

The Runtime Plane

Define Once, Execute Anywhere

The Runtime Plane is where Lumi brings agent definitions to life—securely, flexibly, and at enterprise scale. Built on top of Kubernetes, it enables a single agent spec to run seamlessly across cloud (EKS/Fargate), on-prem clusters, or edge environments (via K3s) without modification. Whether your infrastructure spans datacenters, public cloud, or retail edge, Lumiflow ensures agents run with the same governance and guarantees.

At the center of this layer is the Agent Operator—the secret sauce that makes Kubernetes truly agentic. Out of the box, Kubernetes doesn’t understand prompts, tool scopes, cost budgets, or reasoning lifecycles. The Agent Operator fills that gap. It continuously watches for Lumi’s Custom Resource Definitions (CRDs) and reconciles them into safe, observable, and fully governed workloads. It injects sidecars to enforce cost ceilings, moderate content, and emit OpenTelemetry traces. It applies runtime MCP scopes for secure tool and data access, and handles autoscaling, retries, and sandbox isolation with ease.
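
The watch-and-reconcile pattern behind an operator can be illustrated without a cluster. The sketch below uses plain dictionaries in place of the Kubernetes API, and the spec fields (`image`, `budgetUsd`, `moderation`) and sidecar container names are assumptions for the example, not Lumiflow’s real schema. A production operator would watch the API server through a client library and apply changes there.

```python
def desired_workload(agent):
    """Derive the workload an agent resource should produce, sidecars included."""
    spec = agent["spec"]
    containers = [{"name": "agent", "image": spec["image"]}]
    if "budgetUsd" in spec:
        containers.append(
            {"name": "budget-enforcer", "args": [f"--max-usd={spec['budgetUsd']}"]}
        )
    if spec.get("moderation"):
        containers.append(
            {"name": "guardrail-filter", "args": [f"--profile={spec['moderation']}"]}
        )
    # Telemetry is injected for every agent in this sketch.
    containers.append({"name": "otel-exporter", "args": []})
    return {"name": agent["metadata"]["name"], "containers": containers}


def reconcile(agent, actual):
    """Compare declared and actual state and return the action to take."""
    desired = desired_workload(agent)
    if actual is None:
        return "create", desired
    if actual != desired:
        return "update", desired
    return "noop", actual


agent = {
    "metadata": {"name": "legal-summary"},
    "spec": {
        "image": "registry.example.com/legal-summary:1.0",  # placeholder image
        "budgetUsd": "0.10",
        "moderation": "strict",
    },
}
action, workload = reconcile(agent, actual=None)
```

Calling `reconcile` again with the returned workload yields `noop`, and changing the declared budget yields `update`. That idempotence is what lets an operator run the same comparison repeatedly and converge on the declared state.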

This isn’t just about standardizing deployment—it’s about standardizing trust. With Lumiflow, you can swap model runtimes (e.g., Bedrock, open weights with vLLM, or NVIDIA Triton) without changing application code. Runtime configurations are abstracted and injected declaratively. Sidecars—including the Budget Enforcer, Guardrail Filter, and OTEL Exporter—are automatically deployed based on policy. And if your org requires custom runtime behavior? Just extend the CRD and define your own sidecars or execution constraints.

The foundation of this architecture is Lumi’s use of Kubernetes Custom Resource Definitions (CRDs). CRDs allow teams to teach Kubernetes about new object types—tools, workflows, agents, orchestrators—and treat them like native resources. This means:

- OpenAPI-based schema enforcement

- Declarative versioning from alpha through beta to v1 (for example, v1alpha1 → v1beta1 → v1)

- RBAC-guarded promotion and policy review

- Seamless integration with GitOps and Argo CD pipelines

CRDs unify how developers, SREs, and platform teams interact with agents—declaring expectations in code, automating deployment, and preserving auditability across environments. This is how Lumiflow makes “build once, run anywhere” real for agentic software.
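
Schema enforcement is the easiest of these properties to show in miniature. The validator below handles a tiny subset of OpenAPI-style schemas (object, array, string, number) and is only a sketch: Kubernetes performs this validation itself when a CRD declares a schema, and the `AGENT_SPEC_SCHEMA` fields are invented for the example.

```python
TYPE_CHECKS = {
    "string": lambda v: isinstance(v, str),
    # bool is a subclass of int in Python, so exclude it explicitly.
    "number": lambda v: isinstance(v, (int, float)) and not isinstance(v, bool),
    "array": lambda v: isinstance(v, list),
    "object": lambda v: isinstance(v, dict),
}

# Hypothetical schema for an agent spec.
AGENT_SPEC_SCHEMA = {
    "type": "object",
    "required": ["prompt", "tools"],
    "properties": {
        "prompt": {"type": "string"},
        "budgetUsd": {"type": "number"},
        "tools": {"type": "array", "items": {"type": "string"}},
    },
}


def validate(value, schema, path="spec"):
    """Return a list of human-readable schema violations (empty if valid)."""
    if not TYPE_CHECKS[schema["type"]](value):
        return [f"{path}: expected {schema['type']}"]
    errors = []
    if schema["type"] == "object":
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}.{key}: required field missing")
        for key, sub_schema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], sub_schema, f"{path}.{key}"))
    elif schema["type"] == "array":
        for index, item in enumerate(value):
            errors.extend(validate(item, schema["items"], f"{path}[{index}]"))
    return errors


good = validate(
    {"prompt": "Summarize", "budgetUsd": 0.1, "tools": ["ocr-extractor"]},
    AGENT_SPEC_SCHEMA,
)
bad = validate({"budgetUsd": "cheap", "tools": ["ocr-extractor", 7]}, AGENT_SPEC_SCHEMA)
```

Rejecting a malformed spec at admission time, before anything is scheduled, is what lets the same manifest be trusted across environments.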

The Experience Plane

Build, Test, and Ship Faster with AgentOps

The Experience Plane is where Lumiflow meets the people building and deploying real AI. Designed for full-stack developers, data scientists, low-code builders, SREs, and even policy owners, this layer delivers a unified, opinionated workflow that balances speed, governance, and accessibility, the combination that makes true AgentOps possible.

It all starts with One Spec Everywhere. Whether you’re dragging nodes in the visual builder, writing YAML by hand, or using the Lumiflow CLI, you’re always working against the same typed Kubernetes Custom Resources. There’s no gap between the prototype and the production manifest—developers, security teams, and operators are literally sharing the same source of truth. That eliminates friction, reduces errors, and keeps handoffs clean.

Then comes Fast Prompt Iteration, a game-changer for teams experimenting with LLM behavior. In dev and staging environments, teams can hot-swap prompts or entire reasoning graphs via CRD overrides—no rebuilds, no restarts. But once you promote a variant to production, the system locks it down: the prompt becomes immutable, the image is cryptographically signed, and runtime behavior is frozen for auditability. It’s safe to move fast—and reliable when it counts.
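
The split between freely editable staging prompts and locked production prompts can be sketched as a small store. This is an illustrative model only: `PromptStore` is a hypothetical class, and a SHA-256 digest stands in for the signing step, since a true cryptographic signature would also need a private key and a verification policy.

```python
import hashlib


class PromptStore:
    """Toy model: overrides allowed outside production, digest-locked inside it."""

    def __init__(self):
        self._prompts = {}  # (agent, environment) -> prompt text
        self._locked = {}   # agent -> SHA-256 digest recorded at promotion

    @staticmethod
    def _digest(prompt):
        return hashlib.sha256(prompt.encode("utf-8")).hexdigest()

    def override(self, agent, env, prompt):
        """Hot-swap a prompt in any non-production environment."""
        if env == "production":
            raise PermissionError("production prompts are immutable; promote instead")
        self._prompts[(agent, env)] = prompt

    def promote(self, agent, from_env="staging"):
        """Copy the chosen prompt to production and record its digest."""
        prompt = self._prompts[(agent, from_env)]
        self._prompts[(agent, "production")] = prompt
        self._locked[agent] = self._digest(prompt)
        return self._locked[agent]

    def verify(self, agent):
        """Check that the production prompt still matches the recorded digest."""
        prompt = self._prompts[(agent, "production")]
        return self._digest(prompt) == self._locked[agent]


store = PromptStore()
store.override("legal-summary", "staging", "Summarize the contract in five bullets.")
store.override("legal-summary", "staging", "Summarize the contract in three bullets.")
digest = store.promote("legal-summary")
```

Recording a digest at promotion gives auditors something concrete to compare against later: if the running prompt no longer hashes to the recorded value, it changed after review.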

And finally, there’s Policy Autocomplete, Lumi’s built-in defense against accidental misconfigurations. As teams assemble agents, both the Console and CLI suggest valid MCP scopes, budget caps, and guardrail profiles in real-time—ensuring alignment with organizational policy before code ever hits Git. Once merged, Argo CD + Rollouts handle progressive delivery, canary testing, and auto-rollback, keeping clusters consistent and drift-free.
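
A simplified version of the autocomplete idea: suggest only scopes the organization permits, and flag problems in a draft before it is committed. The policy contents, scope names, and budget cap below are made up for the example, and the "did you mean" hint relies on Python’s standard `difflib`, not on anything Lumiflow-specific.

```python
from difflib import get_close_matches

# Hypothetical organizational policy; scope names and the cap are invented.
ORG_POLICY = {
    "scopes": ["crm:read", "email:send", "erp:read", "erp:write"],
    "max_budget_usd": 0.50,
}


def suggest_scopes(prefix, policy=ORG_POLICY):
    """Return the permitted scopes that start with what the user has typed."""
    return [scope for scope in policy["scopes"] if scope.startswith(prefix)]


def check_draft(draft, policy=ORG_POLICY):
    """List policy problems in a draft agent spec before it reaches Git."""
    problems = []
    for scope in draft.get("scopes", []):
        if scope not in policy["scopes"]:
            nearest = get_close_matches(scope, policy["scopes"], n=1)
            hint = f" (did you mean {nearest[0]!r}?)" if nearest else ""
            problems.append(f"unknown scope {scope!r}{hint}")
    if draft.get("budget_usd", 0) > policy["max_budget_usd"]:
        problems.append(
            f"budget exceeds the cap of {policy['max_budget_usd']:.2f} USD"
        )
    return problems


suggestions = suggest_scopes("erp:")
problems = check_draft({"scopes": ["erp:reed"], "budget_usd": 0.75})
```

Catching a mistyped scope or an oversized budget at authoring time is cheaper than rejecting the same manifest later in the pipeline.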

This entire experience, across CLI, Console, and Visual Builder, runs on top of the same execution framework, letting teams ship agents with the same rigor, velocity, and visibility they expect from their best-run microservices. It’s DevOps, reinvented for the agentic era.

A Day in the Life of AgentOps using Lumiflow

Today the biggest blocker to scaling AI isn’t model performance; it’s orchestration. Most organizations are drowning in disconnected tools, fragile workflows, and pilot projects that never graduate to production. What they need isn’t another chatbot; they need a system that can turn raw intelligence into coordinated, reliable execution across departments, clouds, and compliance boundaries.

Lumiflow delivers exactly that. By unifying agent development, runtime governance, and organizational visibility into a single platform, Lumiflow enables enterprises to move from scattered automation to fully orchestrated AI ecosystems. What follows is a sample, based on real-world usage, of how a large enterprise uses Lumiflow to manage intelligent agents at scale across functions, time zones, and trust boundaries, without losing control.

8:00 AM — Global Shared Services

A Super-Agent named GlobalOps-Orchestrator comes online across the enterprise’s hybrid infrastructure. It’s responsible for triaging inbound operational requests—everything from invoice reconciliation to procurement escalations. It pulls from a real-time queue in Azure Service Bus, filters by priority and business unit, and begins delegating work across a fleet of scoped Agentic Workflows. Some run on EKS in AWS, others on K3s at regional data centers closer to ERP and billing systems.

Because every agent is defined as a CRD, the orchestration logic doesn’t need to worry about where they run—just what they do. Each task delegation includes runtime constraints like $0.10 max spend, strict content moderation, and access to only two tools: erp-connector and email-sender. These are pulled from the Tool Fabric, which is versioned and scoped via MCP. All tools are pre-wrapped, budgeted, and observable.

9:30 AM — Finance & Security Review

Over coffee, the Finance Director logs into the Agent Marketplace (Lumiflow’s live capability catalog) to review cost and SLA dashboards. From their role-specific view, they can see each agent’s current burn rate, policy violations, and per-task cost trends. One agent is flagged for nearing its monthly budget—Finance tags it for review. Meanwhile, the Security team has detected a sudden spike in external API calls from a tool inside SalesOps-Agent-v2. They drill into the OTEL trace, identify a new lead enrichment behavior, and raise a request to tighten MCP scope.

Rather than email back and forth, both teams tag the agent’s CRD in GitHub. This triggers a lightweight policy review flow handled through Lumiflow’s GitOps-native pipeline. Argo CD detects the updated manifest, runs a dry-run in staging, and sends Slack alerts if any guardrail thresholds are violated.

11:00 AM — Developer Collaboration

A full-stack developer and a business analyst are pair-building a new agent in Lumiflow’s low-code visual canvas. The analyst drags in ocr-extractor, contract-matcher, and a GPT prompt node labeled “Legal Summary Generator.” The developer, working in the CLI, syncs the graph to Git where it’s versioned as a new AgentDefinition CRD.

They want to A/B test two different summarization prompts. In staging, they use Lumiflow’s Prompt Override strategy, letting both prompts run side-by-side—no rebuild, no downtime. Real-time traces show which variant performs better across latency, cost, and completeness. Once they pick a winner, a single CLI command promotes it to production—at which point it becomes immutable, signed, and enforced via admission control.
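
Comparing two prompt variants from trace data might look like the sketch below. The trace records, metric names, and numbers are fabricated for illustration, and the selection rule (highest completeness, then lowest cost, then lowest latency) is just one reasonable assumption; a team would choose its own weighting.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical trace records, one per run; values are made up.
traces = [
    {"variant": "prompt-a", "latency_ms": 800, "cost_usd": 0.02, "completeness": 0.5},
    {"variant": "prompt-a", "latency_ms": 1000, "cost_usd": 0.04, "completeness": 1.0},
    {"variant": "prompt-b", "latency_ms": 600, "cost_usd": 0.02, "completeness": 1.0},
    {"variant": "prompt-b", "latency_ms": 700, "cost_usd": 0.02, "completeness": 1.0},
]


def summarize(traces):
    """Average each metric per prompt variant."""
    runs_by_variant = defaultdict(list)
    for trace in traces:
        runs_by_variant[trace["variant"]].append(trace)
    return {
        variant: {
            metric: mean(run[metric] for run in runs)
            for metric in ("latency_ms", "cost_usd", "completeness")
        }
        for variant, runs in runs_by_variant.items()
    }


def pick_winner(summary):
    """Prefer completeness, then lower cost, then lower latency."""
    return max(
        summary,
        key=lambda v: (
            summary[v]["completeness"],
            -summary[v]["cost_usd"],
            -summary[v]["latency_ms"],
        ),
    )


summary = summarize(traces)
winner = pick_winner(summary)
```

With only two runs per variant this comparison is anecdotal; in practice the decision should wait for enough traces to make the averages meaningful.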

1:00 PM — Executive Strategy Briefing

The COO opens their custom dashboard built on top of Lumiflow’s observability layer. It shows near-real-time KPIs:

- SLA adherence for automated escalations

- Policy violations (none today!)

- Agent-based cost savings vs. human process baselines

- Tasks handled autonomously vs. those flagged for human review

In one click, they can drill down into any Super-Agent’s behavior tree. For VendorOps-Orchestrator, they see which agents it delegated to, what tools were called, how much was spent, and where retry logic kicked in. This chain-of-thought visibility is what makes AI safe in their environment. They’re not just getting output—they’re getting justification.

4:00 PM — SRE & Drift Prevention

The SRE team reviews their daily cluster health report. Thanks to Lumiflow’s sidecar-injected guardrails and OPA-based policy engine, they know no agent has violated CPU, memory, or budget constraints. One agent shows slight divergence between declared and actual behavior: it started using a tool not in the CRD due to a misconfigured prompt in staging. The Agent Operator flagged it and prevented promotion to prod.

SREs make a minor prompt correction, commit it, and Argo CD handles the rollout. No fire drills. No late-night patches. Agents are treated like microservices: declarative, observable, and governed.
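
The divergence check in this scenario boils down to comparing the tools an agent declares with the tools it was actually observed calling. Here is a minimal sketch under that assumption; the function name, the record shape, and the tool names are hypothetical.

```python
def detect_drift(declared_tools, observed_calls):
    """Flag tools that appear in traces but not in the agent's declared spec."""
    observed = {call["tool"] for call in observed_calls}
    undeclared = sorted(observed - set(declared_tools))
    return {"undeclared_tools": undeclared, "promotable": not undeclared}


report = detect_drift(
    declared_tools=["ocr-extractor", "contract-matcher"],
    observed_calls=[
        {"tool": "ocr-extractor"},
        {"tool": "web-scraper"},  # not declared in the spec
        {"tool": "ocr-extractor"},
    ],
)
```

Gating promotion on an empty `undeclared_tools` list keeps a staging mistake from reaching production, which is the outcome described above.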

5:30 PM — Global Scale, Local Control

As teams log off across time zones, Lumiflow keeps humming. Super-Agents in the Americas continue routing tasks through regional K3s clusters to comply with data residency rules. In EMEA, a new AgentDefinition is automatically spun up to assist with invoice anomalies after month-end close. No human intervention needed—just CRDs, policies, and automation.

Across cloud, on-prem, and edge, the entire organization is executing intelligent work at scale—with governance, cost control, and operational clarity.

This is AgentOps in action.

Finally Scaling Intelligence, Safely

What sets Lumiflow apart isn’t just that it can run agents—it’s that it turns agentic behavior into a governed, composable, and production-grade capability across your enterprise. Most AI initiatives stall not because models don’t work, but because the supporting infrastructure can’t keep up. Lumiflow changes that equation by introducing a structured architecture that scales with your organization’s ambition, complexity, and compliance demands.

At the foundation is the Control Plane—your command center for policy, visibility, and safety. Here, organizations define global guardrails using Open Policy Agent (OPA), configure budget ceilings and resource limits, and assign MCP scopes to restrict tool and data access. Security, compliance, and finance teams get a single point of visibility—complete with role-specific dashboards, drift detection, and audit trails. It’s how governance moves upstream—baked into every agent from day one, not bolted on after the fact.

The Runtime Plane brings those specifications to life. Every tool, agent, and workflow is expressed as a Kubernetes Custom Resource Definition (CRD)—a fully typed, versioned contract. Whether running in AWS EKS, on-prem clusters, or lightweight K3s nodes at the edge, agents behave consistently and predictably. Sidecars handle cost enforcement, content moderation, OTEL-based telemetry, and access control—creating a sandboxed runtime for each agent, even when behavior is emergent or unpredictable. MCP ensures agents only see the tools and data they’re authorized to use, dynamically scoped based on context.

On top of it all sits the Experience Plane, which aligns teams around a shared workflow. Pro-code engineers can build and deploy using the CLI. Low-code builders and analysts use the drag-and-drop Visual Builder to compose new agentic workflows. Everyone interacts with the same Agent Marketplace—one live catalog of tools, workflows, agents, and Super-Agents, enriched with role-aware views for Engineering, Security, Finance, and Product. From idea to staging to production, it’s one spec, one workflow, no silos.

This layered architecture is what makes AgentOps real. It lets you treat agents like infrastructure—not just experimental code. You can version them. Test them. Promote or roll them back. Apply policy to them. And most importantly, trust them to execute core business functions in a way that’s observable, compliant, and cost-controlled.

As organizations advance from isolated use cases to full-spectrum intelligence, the complexity of agentic behavior will only increase. Lumiflow meets that complexity with structure. It doesn’t just help you run more agents—it helps you orchestrate them, govern them, and scale them across your entire enterprise.
